A “Fuller” Look at Education Issues: Shaky Methods, Shaky Motives: A Recap of My Critique of the NCTQ’s Review of Teacher Preparation Programs

Preface

The following is based on my complete study published in the Journal of Teacher Education. This version leaves out some examples, technical points, and suggestions for an approach to evaluating preparation programs. Sage Journals has been kind enough to make the complete study available for free to the public for a one month period. The study can be found at: http://tinyurl.com/mhqjyn6

Introduction

After last year’s release of the NCTQ’s ratings of teacher preparation programs, headlines and leading remarks about US teacher preparation programs proclaimed: “Teacher prep programs get failing marks” (Sanchez, 2013); “University programs that train U.S. teachers get mediocre marks in first-ever ratings” (Layton, 2013); and, “The nation’s teacher-training programs do not adequately prepare would-be educators for the classroom, even as they produce almost triple the number of graduates needed” (Elliot, 2013).

Critics of traditional teacher preparation have used the report as evidence that US teacher preparation is completely broken and that we need to radically change traditional university-based programs and/or abandon them in favor of a marketplace of a wide array of providers. For example, Arthur Levine (2013) wrote:

The NCTQ described a field in disarray with low admission standards, a crazy quilt of varying and inconsistent programs, and disagreement on issues as basic as how to prepare teachers or what skills and knowledge they need to be effective. The report found few excellent teacher-education programs, and many more that were failing. Most were rated as mediocre or poor.

While the NCTQ critiques are not substantially different than many previous calls for reform, the current calls for reform come at a time of increased belief that public schools have failed, partially as a result of traditional teacher preparation programs and “we need to end the effective monopoly that education schools have on teacher training. Policymakers must foster a robust marketplace of providers from which schools and school districts can choose candidates” (Kamras & Rotherham, 2007).

NCTQ’s efforts have raised enough concerns among researchers that a wide array of critiques were posted and published after last year’s report. Even before the report was released, the concern was so great among teacher preparation personnel that most programs refused to participate in the effort. Indeed, only 10% of the more than 1,100 programs identified by NCTQ fully participated (American Association of Colleges of Teacher Education [AACTE], 2013). Based on what I have heard from programs that participated last year, I suspect the participation rate decreased this year.

My critique is separated into two major sections: creation of standards and methodological issues.

Critique 1: Creation of NCTQ Standards

This section focuses on the rationale for the study design. My critique is focused on four issues in particular: the study’s focus on inputs rather than outcomes; the lack of a solid research foundation for the NCTQ standards; research and standards omitted by NCTQ; and incorrect application of research findings in adopting standards and rating programs.

A. Focus on Inputs.

One major criticism of the report is the almost exclusive focus on inputs and the lack of any serious consideration of outcomes. The 2014 report will include outcome measures. The NCTQ descriptions of the outcome measures included in the 2014 report, however, imply that the measure adopted focuses on identifying programs that collect outcome measures and use them in program improvement efforts. While the addition of this particular “outcome” measure is a useful indicator, the NCTQ report still does not employ true outcome measures (e.g., percentage of graduates employed, retention rate of graduates, estimates of graduates’ effectiveness in improving student outcomes, behaviors of graduates in the classroom, etc.) as a means of identifying the effectiveness of programs.

While inputs are clearly important to quality preparation, the outcomes are what truly differentiate quality preparation from poor preparation. NCTQ rightly claims that assessing the quality of every preparation program in the country based on outcome measures is currently impossible without the investment of a substantial amount of money. I concur with NCTQ on this point. For example, states would have to collect and make available a wide array of very detailed information. This would be financially costly to states and programs, precisely at a time when funding to these entities has been substantially decreased.

Further, even if such data were available, there are serious impediments to accurately assessing the independent effect of programs on these outcomes. Indeed, researchers continue to argue about the accuracy of efforts to estimate program efficacy based on outcome measures. Further, I could not find any studies that attempt to assess the effectiveness of individual programs based on placement or retention rates. This is likely due to serious methodological barriers to accurately estimating effectiveness based on these measures.

Thus, even if NCTQ included outcomes measures in the rankings, the outcomes measures could very well be inaccurate.

Given such difficulties, the exclusion of outcome measures in the report was understandable. However, based on such information, NCTQ should have decided to either: (a) be patient and allow research to catch up with their desire to assess programs, or (b) express that their assessment was based purely on inputs, thus should not be considered an indication of program quality. Yet, NCTQ chose to do neither. Rather, NCTQ claimed it can assess the quality of a preparation program’s teachers based almost entirely on inputs that are measured primarily by a review of syllabi of some, but not all, courses taken by students in a program. This would, in fact, be reasonable if and only if a large body of research had fully established causal linkages between the inputs included in the study and important outcomes such as those described above. Currently, there is simply not enough research evidence to make such a leap (Coggshall, Bivona, & Reschly, 2012), particularly when considering the scant research base linking outcomes for core subject area teachers from different preparation programs across different school levels and contexts.

This is the typical modus operandi of current supporters of increased privatization in education: NCTQ rushed headlong into making judgments without the assistance of a strong research base or any sense of caution. Indeed, as Bruce Baker astutely points out, the rationalization seems to be that doing something, regardless of the evidence, is highly preferable to doing nothing.

B. Standards Lack a Solid Research Base

The second major critique of NCTQ’s effort is the failure to ground the standards on a solid foundation of high-quality research. This is not entirely the fault of NCTQ, as the research connecting inputs to outcomes is simply not robust enough to develop a clear set of standards upon which programs could be ranked or held accountable. Some may claim this is the fault of researchers, but lack of access to data, the incredible complexity in conducting such studies, and inadequate funding to support such studies seriously impede the development of a solid research base in this area.

Given the paucity of research in this area, even NCTQ admits their evaluation standards are not based on an extensive literature base. Indeed, NCTQ states:

“[Our] standards were developed over five years of study and are the result of contributions made by leading thinkers and practitioners from not just all over the nation, but also all over the world. To the extent that we can, we rely on research to guide our standards. However, the field of teacher education is not well-studied.”

Note the word “researcher” is not included in this description. NCTQ relied on thinkers and practitioners, but not researchers. Researchers are critical to the process because the history of education is replete with instances in which beliefs based on common sense turned out to be incorrect after research examined an issue.

In the full report, NCTQ provides a difficult-to-interpret graph about the sources of support for the various standards (see Figure 38 on page 77 in the 2013 NCTQ report). The most striking revelation of the graph is that high-quality research was only a very small source for the development and adoption of the standards.

Even the “research consensus” portion of the graph, however, is quite misleading. NCTQ (2013b) classified the research for each standard in two stages: “first considering design ‘strength’ relative to several variables common to research designs, and second, considering whether student effects (as measured by external, standardized assessments) were considered” (p. 2).

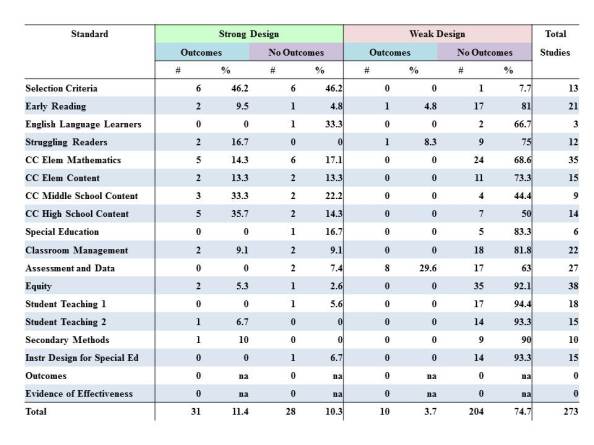

Using the tables provided by NCTQ for each standard, I created Table 1, which includes the number and percentage of studies for each standard within the four possible categories created by NCTQ. As shown in Table 1, only 9 of the 18 standards (just 50%) relied on more than one study classified as having both a strong design and a focus on student test scores. Astonishingly, 7 of the 18 standards did not have a single study classified as having both a strong design and a focus on student test scores. Only three standards (Selection Criteria, Elementary Mathematics, and High School Content) had five or more such studies. Based on my experience, I argue that only these three standards have a research base even remotely substantial enough to be considered as standards for which programs should be accountable.

Table 1: Number and Percentage of Studies per Standard by Strength of Methods and Examination of Student Outcomes

Even this is misleading in two ways. First, NCTQ does not provide any connection between the cited studies and the individual indicators within each standard, the core subject areas included in the study (elementary reading and mathematics, English language arts, mathematics, science, and social studies), or the school levels addressed (elementary schools, middle schools, and high schools). In other words, the report does not detail whether a study examined all subject areas or school levels. For example, NCTQ notes a few studies on secondary content provide limited evidence of the importance of subject matter knowledge in improving student achievement. NCTQ uses the evidence to adopt an indicator that measures whether a graduate has at least 30 hours of content courses or a major in the field for every single subject area. The research cited by NCTQ, however, does not support the adoption of this indicator in English language arts or social studies. More disturbingly, NCTQ cites a study by Goldhaber (2007) as being supportive of the NCTQ high school content standard even though the study examined only elementary teachers. Thus, NCTQ simply generalized the findings to other grade levels despite no evidence that such a generalization was warranted.

A more transparent approach would have provided documentation about the statistically significant findings, the direction of the association (positive or negative), the subject area under study, and the school level associated with the finding. This approach would shed light on the conflicting evidence of the studies cited.

For example, NCTQ cites Monk’s 1994 study as substantiation for adopting an indicator requiring at least 30 content hours or an academic major in all subject areas. But Monk’s study, as is clearly evident in the title, examined only mathematics and science teachers. More disturbingly, NCTQ cites Harris and Sass (2011) as providing strong support for requiring high college entrance scores and content instruction at all three schooling levels (elementary, middle, and high schools) despite the fact that Harris and Sass (2011, p. 798) found that, “There is no evidence that teachers’ pre-service (undergraduate) training or college entrance exam scores are related to productivity.” This not only directly contradicts the standards that were supposedly supported by the study, but directly contradicts the entire thesis of the NCTQ review!

Ultimately, examples such as these suggest NCTQ simply cherry-picked the research results that supported their preconceived notions of what quality preparation entails.

C. Omitted Research, Missing Standards, and Narrowly Defined Standards.

Despite the relatively thin research base, NCTQ clearly did not utilize the results from the extant literature in adopting standards. For example, Eduventures (2013) contended a number of inputs that do not appear in the NCTQ study have at least some research evidence that establishes a link between the preparation activity and student outcomes. These areas include: the quality of instruction provided in teacher preparation and content courses; the provision of student support services; mentoring and induction provided by the program; and, the length of the clinical experience required of students.

Further, the NCTQ standards lack any mention of the need to address diversity issues within teacher preparation programs (Dooley, et al., 2013). For example, Dooley, et al. (2013) state:

NCTQ’s singular focus on “the five elements of reading” is neither broad nor deep—nor is it helpful for preparing teachers for diverse classrooms. Any report that talks of students as though they’re all alike—as the NCTQ review does—neglects the reality of today’s diverse classrooms.

Perhaps most importantly, the standards for reading/English and mathematics instruction are not grounded in the research that is clearly evident in the standards developed by the National Council of Teachers of English or the National Council of Teachers of Mathematics. It is unclear why NCTQ believes they know more about reading instruction than actual experts.

Critique 2: Methodological Problems

Before critiquing the methodology, I first provide an overview of the methodology employed by NCTQ in the 2013 analysis and presumably in the 2014 analysis.

A. Overview of NCTQ Methodology.

NCTQ states their review was based on 11 different data sources: syllabi, required textbooks, institutional catalogues, student teaching handbooks, student teaching evaluation forms, capstone project guidelines, state regulations, institution-district correspondence, graduate and employer surveys, state data on institutional performance, and institutional demographic data. The first phase of the study reviewed institutional websites and asked programs to provide information. The second phase of the study included a content analysis of the syllabi, ancillary materials, and the required readings.

The primary data source was syllabi, although the other 10 types of information were collected if available. Data were validated for accuracy by a team of “trained general analysts” (NCTQ, 2013a, p. 82). The analysts, purportedly experts in the field, examined the topics taught and the textbooks used. The methodology section in the full report provides a more detailed description of this process.

B. Critique of the NCTQ Methodology

My major critiques of the NCTQ methods include: the use of syllabi as an indicator of content; insufficient data/response rate; and failure to adequately document the ranking methodology.

1. Use of Syllabi as an Indicator of Content and Quality.

NCTQ claimed that using syllabi to assess course content is an accepted research practice and syllabi likely overestimate the coverage of content during a class; thus, using syllabi is a generous method for ascertaining the enacted curriculum.

NCTQ is correct in stating that many studies on teacher preparation employ the review of syllabi in the research study. The authors of such studies, however, do not rank programs, advocate for the adoption of standards based on their findings, or even mention programs by name. The purpose of authors using such methods in the research arena is simply to investigate and inform the conversation, not to suggest programs meet a standard suggested by their findings. The standard of evidence should be much greater when identifying programs, adopting standards, and ranking programs.

Further, NCTQ has not presented any research on the degree to which syllabi accurately reflect course content, most likely because there is no easily identifiable research that addresses this issue. In fact, NCTQ’s own audit panel of experts concluded that NCTQ should, “[study] how accurately reading syllabi reflect the actual content of classroom instruction” (NCTQ Audit Panel, 2013).

There is a strategy to ensure a greater degree of accuracy when depicting course content through syllabi. This strategy is referred to as “member checks”: providing an opportunity for participants to review the findings and correct any inaccurate information. The purpose of member checks is to increase the accuracy of the information gathered as a means of improving the validity of the inferences drawn from the study. Interestingly, NCTQ conducted member checks in prior reports, but not for the 2013 report. The failure to conduct member checks resulted in a large number of factual errors in the report. For example, Darling-Hammond (2013) stated:

It is clear as reports come in from programs that NCTQ staff made serious mistakes in its reviews of nearly every institution. Because they refused to check the data – or even share it – with institutions ahead of time, they published badly flawed information without the fundamental concerns for accuracy that any serious research enterprise would insist upon.

Thus, NCTQ abandoned the one strategy that was necessary to provide credibility to their use of syllabi review.

In addition, as any teacher well knows, engaging students in learning particular content is by no means any guarantee that students learn the content and are able to apply the content in real-world situations. NCTQ did not obtain such data—not even cursory data such as licensure/certification test scores.

2. Insufficient Data and Response Rate.

Even if NCTQ did, in fact, send review teams to programs and interview graduates (as is being claimed for the 2014 report), we know from research that making inferences about a program would require the collection of information from a substantial percentage of graduates. Further, a comparison would need to be made between respondents and non-respondents. NCTQ provided no evidence about interviews, response rates, protocols, and other such basic information typically provided by researchers. Thus, we have no evidence that data collected in this way would allow for valid and reliable inferences to be made.

3. Relationship between NCTQ Stars and Program Outcomes.

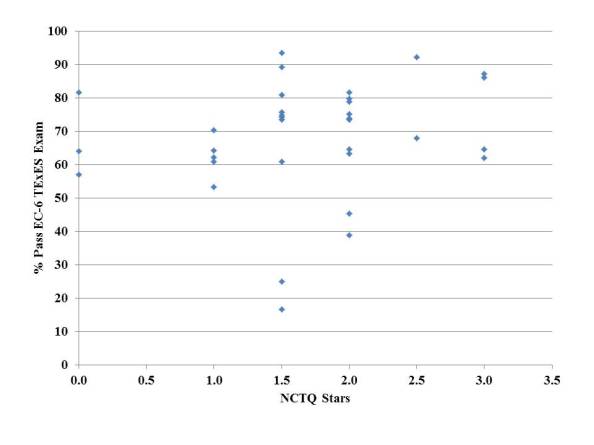

Despite NCTQ’s strong critique that too few programs assess their own effectiveness, NCTQ failed to assess their own findings by examining the relationship between their rankings and available evidence on outcomes. One outcome that is often available from state education agencies and even the Title II reports from the US Department of Education is the percentage of graduates passing licensure/certification examinations on the first attempt or on the most recent attempt. Texas has long provided information on initial passing rates as part of the Accountability System for Educator Preparation adopted in 1993. Using these data, I compared the initial preparation program passing rates on the generalist examination for those seeking certification for early childhood through the 6th grade in 2010 and the number of NCTQ stars awarded.

While certification scores are an imperfect indicator of program quality, the percentage of graduates passing a content certification examination on the initial attempt certainly provides some information about a program’s selection criteria and/or efforts to ensure graduates have had adequate access to content instruction during their preparation. At the very least, a program with a low passing rate would probably not be considered a high quality program by most in the field.

As shown in Figure 2, the initial passing rates for programs varied wildly across the NCTQ star rankings. For example, a program receiving zero stars had an 81.6% passing rate while a program receiving two stars had a passing rate of 38.9%. NCTQ would have us believe the first program is substantially worse than the second program—so much worse, in fact, that NCTQ issued a consumer alert about the first program. Similarly, a program that received 1.5 stars had a passing rate of 93.5% while another program that also received 1.5 stars had a passing rate of 16.7%. NCTQ would have us believe the two programs have the same level of quality with respect to preparing elementary teachers. A simple calculation of the correlation between the number of stars and percent passing revealed a coefficient of 0.178 that was not statistically significant.

Figure 2: Percentage of Program Graduates Passing Certification Exams on the Initial Attempt by NCTQ Star Ratings
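For readers who want to run the same kind of check, the correlation described above can be sketched in a few lines of Python. This is only an illustration, not the analysis behind the 0.178 figure: the function name `pearson_r` is my own, and the data are limited to the four programs quoted in the text, so the resulting coefficient will differ from the one computed on the full set of Texas programs.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient for two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of cross-products and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / sqrt(var_x * var_y)


# ASSUMPTION: only the four programs mentioned in the text are used here.
# This is NOT the full set of Texas programs plotted in Figure 2.
stars = [0.0, 2.0, 1.5, 1.5]           # NCTQ stars awarded
pass_rates = [81.6, 38.9, 93.5, 16.7]  # initial passing rate (%)

r = pearson_r(stars, pass_rates)
print(f"r = {r:.3f} (n = {len(stars)})")
```

With so few data points the coefficient says little on its own; the point is that anyone with the state passing rates and the published star ratings can carry out this validity check.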

Further, NCTQ could have used the results from Goldhaber and Liddle (2011) to examine the relationship between the NCTQ stars and student value-added scores for individual preparation programs in Washington. Ironically, NCTQ cited a version of this paper (identified as “Goldhaber, D., et al., ‘Assessing Teacher Preparation in Washington State Based on Student Achievement’ presented at Association for Public Policy Analysis & Management conference”) as evidence that programs differ in terms of their impact on student achievement. Yet, they failed to use the information in the paper to check the validity of their own findings. If they had done so, they would have discovered that both the University of Washington-Bothell and Eastern Washington earned one star from NCTQ, but the value-added scores for the University of Washington-Bothell were statistically significantly greater than for Eastern Washington in both reading and mathematics. The difference was particularly large in reading. If we simply accept the NCTQ review at face value, we would assume the two programs are of equal quality when methods to assess effectiveness specifically endorsed by NCTQ clearly show the two programs are not equally effective. Again, these results cast serious doubt on the NCTQ ranking system, even when using a method endorsed by NCTQ. A number of other programs in Washington also received a one-star ranking from NCTQ, but there are statistically significant differences between many of the programs.

Similarly, NCTQ failed to mention the findings from the Koedel, Parsons, Podgursky, & Ehlert study from 2012 that found there was very little variation in program effectiveness as measured by student test scores across preparation programs and that most of the variation in effectiveness occurred within programs. Despite this finding, NCTQ assigned a range of stars to Missouri programs. Again, the available evidence—using methods specifically endorsed by NCTQ—fails to substantiate NCTQ’s rankings.

Clearly, NCTQ ignored the available evidence that their rankings were inaccurate indicators of program quality. NCTQ’s failure to examine this evidence calls into question their ultimate intent in publishing the rankings. Indeed, if their intent was to improve preparation programs, one would assume they would have done due diligence in ensuring their rankings have some relationship to outcomes. Reporters should question NCTQ about why their rankings do not align with research on program outcomes and why such evidence was ignored.

Conclusions

As shown above, there are a number of very serious problems with the NCTQ report. These issues range from the rationale for the review’s standards to various methodological problems. Myriad other problems with the review exist that are well documented by others elsewhere.

Most disturbingly, the star ranking system does not even appear to be associated with the very program outcomes such as licensure/certification test passing rates or the aggregate value-added scores in reading or mathematics of programs that NCTQ purports should be used to hold programs accountable. NCTQ’s refusal to even attempt to validate their own effort gives substantial support to those who believe NCTQ has absolutely no intention of helping traditional university-based programs and has every intention of destroying such programs and replacing them with a market-based system of providers.

As I show in this paper and elsewhere (see Vigdor & Fuller, 2012), Texas went down that route and the results were not pretty. Specifically, for-profit alternative programs in Texas allowed individuals with less than a 2.0 undergraduate GPA to enter programs. These same programs, not surprisingly, had abysmally low passing rates on the state certification examinations. The programs even allowed uncertified individuals to enter the classroom and instruct students. Does NCTQ really believe a Wild West free-market system will increase the quality of the preparation of teachers and improve student outcomes?

If NCTQ wants to truly help improve student outcomes by improving teacher preparation, they should stop using incredibly weak methods, unsubstantiated standards, and unethical evaluation strategies to shame programs and start working with programs to build a stronger research base and information system that can be used by programs to improve practice.

Yes, teacher preparation has room for improvement, but throwing rocks from a glass house is not helpful to anyone but their own organization and the organizations funding the study.

Given the very shaky foundation upon which the NCTQ review was built and the shaky motives of NCTQ in conducting the review, the entire review should be discounted by educators, policymakers, and the public.

In the end, NCTQ chose the pathway that rejected the voices of those educators highly committed to improving teacher preparation and chose to highlight their own voices and agenda instead. This has damaged any sense of partnership between teacher preparation programs and NCTQ. As such, funding should be provided to organizations truly committed to the improvement of teacher preparation rather than to those that care mostly about their own level of influence.

REFERENCES

American Association of Colleges of Teacher Education. (June 18, 2013). NCTQ Review of Nation’s Education Schools Deceives, Misinforms Public. Washington, DC: Author. Retrieved at: http://aacte.org/news-room/press-releases/nctq-review-of-nations-education-schools-deceives-misinforms-public.html

Darling-Hammond, L. (June 19, 2013). Why the NCTQ teacher prep ratings are nonsense. Palo Alto, CA: Stanford Center for Opportunity Policy in Education.

Dooley, C.M., Meyer, C., Ikpeze, C., O’Byrne, I., Kletzien, S., Smith-Burke, T., Casbergue, R. & Dennis, D. (2013). LRA Response to the NCTQ Review of Teacher Education Programs. Retrieved at: http://www.literacyresearchassociation.org/pdf/LRA%20Response%20to%20NCTQ.pdf

Coggshall, J.G., Bivona, L., & Reschly, D.J. (2012). Evaluating the effectiveness of teacher preparation programs for support and accountability. Washington, DC: National Comprehensive Center for Teacher Quality.

Eduventures (June 18, 2013). A review and critique of the National Council on Teacher Quality (NCTQ) methodology to rate schools of education. Author. Retrieved at: http://www.eduventures.com/2013/06/a-review-and-critique-of-the-national-council-on-teacher-quality-nctq-methodology-to-rate-schools-of-education/

Elliot, P. (June 18, 2013). Too many teachers, too little quality. Yahoo News. Retrieved at: http://news.yahoo.com/report-too-many-teachers-too-little-quality-040423815.html

Goldhaber, D., & Liddle, S. (2011). The gateway to the profession: Assessing teacher preparation programs based on student achievement. Working Paper No. 2011-2.0. Seattle, WA: Center for Education Data & Research.

Goldhaber, D. (2007). Everyone’s doing it, but what does teacher testing tell us about teacher effectiveness? Journal of Human Resources, 42(4), 765–794.

Harris, D. N. & Sass, T. R. (2011). Teacher training, teacher quality, and student achievement. Journal of Public Economics, 95(7), 798–812.

Kamras, J. & Rotherham, A. (2007). America’s teaching crisis. Democracy. Retrieved at: http://www.democracyjournal.org/5/6535.php?page=all

Koedel, C., Parsons, E., Podgursky, M., & Ehlert, M. (2012). Teacher Preparation Programs and Teacher Quality: Are There Real Differences Across Programs? National Center for Analysis for Longitudinal Data in Education Research, Working Paper, 79.

Layton, L. (June 18, 2013). University programs that train U.S. teachers get mediocre marks in first-ever ratings. The Washington Post. Retrieved at: http://www.washingtonpost.com/local/education/university-programs-that-train-us-teachers-get-mediocre-marks-in-first-ever-ratings/2013/06/17/ab99d64a-d75b-11e2-a016-92547bf094cc_story.html

Levine, A. (June 21, 2013). Fixing how we train U.S. teachers. The Hechinger Report. Retrieved at: http://hechingerreport.org/content/fixing-how-we-train-u-s-teachers_12449/

Monk, D. H. (1994). Subject Area Preparation of Secondary Mathematics and Science Teachers and Student Achievement. Economics of Education Review, 13, 125-145.

National Council on Teacher Quality Audit Panel (2013). Audit Panel statement on the NCTQ Teacher Prep Review. Washington, DC: National Council on Teacher Quality. Document retrieved at: http://nctq.org/dmsView.do?id=2181

National Council on Teacher Quality. (2013a). Teacher Prep Review. Washington, DC: Author.

National Council on Teacher Quality. (2013b). Standards. Washington, DC: Author. Retrieved at: http://www.nctq.org/teacherPrep/ourApproach/standards/.

Sanchez, C. (June 18, 2013). Study: Teacher prep programs get failing marks. National Public Radio. Retrieved at: http://www.npr.org/2013/06/18/192765776/study-teacher-prep-programs-get-failing-marks

Vigdor, J. & Fuller, E.J. (2012). Examining Teacher Quality in Texas. Unpublished expert witness report for Texas school finance court case: Texas Taxpayer and Student Fairness Coalition v. Robert Scott and State of Texas.

This blog post has been shared by permission from the author.

The views expressed by the blogger are not necessarily those of NEPC.