VAMboozled!: The Danielson Framework: Evidence of Un/Warranted Use

The US Department of Education’s statistics, research, and evaluation arm, the Institute of Education Sciences, recently released a study (here) about the validity of the Danielson Framework for Teaching’s observational ratings as used for 713 teachers, with some minor adaptations (see box 1 on page 1), in the second largest school district in Nevada: Washoe County School District (Reno). This district is to use these data, along with student growth ratings, to inform decisions about teachers’ tenure, retention, and pay-for-performance, in compliance with the state’s still current teacher evaluation system. The study was authored by researchers out of the Regional Educational Laboratory (REL) West at WestEd, a nonpartisan, nonprofit research, development, and service organization.

As many of you know, principals in many districts throughout the US use, as per the Danielson Framework, a four-point rating scale to rate teachers on 22 teaching components meant to measure four different dimensions or “constructs” of teaching.

In this study, researchers found that principals did not discriminate much among the four individual constructs and 22 components (i.e., the four domains were not statistically distinct from one another, and the ratings of the 22 components seemed to measure the same or a universal cohesive trait). Principals did, however, discriminate among the teachers they observed to be more generally effective and highly effective (i.e., on the universal trait of overall “effectiveness”), as captured by the two highest categories on the scale. Hence, analyses support the use of the overall scale rather than the sub-components or items in and of themselves. Put differently, and in the authors’ words, “the analysis does not support interpreting the four domain scores [or indicators] as measurements of distinct aspects of teaching; instead, the analysis supports using a single rating, such as the average over all [sic] components of the system to summarize teacher effectiveness” (p. 12).

In addition, principals also (still) rarely identified teachers as minimally effective or ineffective, with approximately 10% of ratings falling into the lowest two of the four categories on the Danielson scale. This was also true across all but one of the 22 aforementioned Danielson components (see Figures 1-4, p. 7-8; see also Figure 5, p. 9).

I emphasize the word “still” in that this negative skew — what would be an illustrated distribution of, in this case, the proportion of teachers receiving all scores, whereby the mass of the distribution would be concentrated toward the right side of the figure — is one of the main reasons we as a nation became increasingly focused on “more objective” indicators of teacher effectiveness, focused on teachers’ direct impacts on student learning and achievement via value-added measures (VAMs). Via “The Widget Effect” report (here), authors argued that it was more or less impossible to have so many teachers perform at such high levels, especially given the extent to which students in other industrialized nations were outscoring students in the US on international exams. Thereafter, US policymakers who got a hold of this report, among others, used it to make advancements towards, and research-based arguments for, “new and improved” teacher evaluation systems with key components being the “more objective” VAMs.

In addition, and as directly related to VAMs, in this study researchers also found that each rating from each of the four domains, as well as the average of all ratings, “correlated positively with student learning [gains, as derived via the Nevada Growth Model, as based on the Student Growth Percentiles (SGP) model; for more information about the SGP model see here and here; see also p. 6 of this report here], in reading and in math, as would be expected if the ratings measured teacher effectiveness in promoting student learning” (p. i). Of course, this would only be expected if one agrees that the VAM estimate is the core indicator around which all other such indicators should revolve, but I digress…

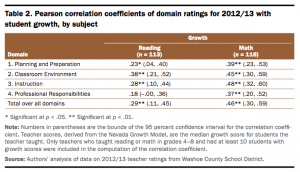

Anyhow, by calculating standard correlation coefficients between teachers’ growth scores and the four Danielson domain scores, researchers found that “in all but one case” [i.e., the correlation coefficient between Domain 4 and growth in reading], said correlations were positive and statistically significant. Indeed this is true, although the magnitude and practical significance of the correlations they observed, as aligned with what is increasingly becoming a saturated finding in the literature (see similar findings about the Marzano observational framework here; see similar findings from other studies here, here, and here; see also other studies as cited by authors of this study on p. 13-14 here), range from “very weak” (e.g., r = .18) to “moderate” (e.g., r = .45, .46, and .48). See their Table 2 (p. 13) for all relevant correlation coefficients.
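For a rough sense of what these magnitudes mean in practical terms, one common (though certainly not the only) yardstick is the squared correlation, r², which gives the proportion of variance that two measures share. The short sketch below is an illustration only, not an analysis from the report: it uses just the coefficients quoted above, and the function name `shared_variance` and the dictionary of labels are hypothetical.

```python
# Illustrative sketch: convert the correlation coefficients quoted in this
# post into proportions of shared variance (r squared).
# The labels mirror the post's wording; the grouping is an assumption,
# not the layout of the report's Table 2.
quoted_correlations = {
    "very weak": [0.18],
    "moderate": [0.45, 0.46, 0.48],
}


def shared_variance(r: float) -> float:
    """Return r squared, the proportion of variance two measures share."""
    return r ** 2


for label, values in quoted_correlations.items():
    for r in values:
        share = shared_variance(r)
        print(f"r = {r:.2f} ({label}): about {share:.1%} of variance shared")
```

By this yardstick, the quoted correlations correspond to roughly 3% to 23% of shared variance between the observational ratings and the growth scores, leaving most of the variance in either measure unexplained by the other.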

Regardless, “[w]hile th[is] study takes place in one school district, the findings may be of interest to districts and states that are using or considering using the Danielson Framework” (p. i), especially those that intend to use this particular instrument for summative and sometimes consequential purposes. The Framework’s factor structure does not hold up for such purposes unless, possibly, the instrument is used as a generalized discriminator. Even then, however, the evidence of validity is still quite weak to support further generalized inferences and decisions.

So, those of you in states, districts, and schools, do make these findings known, especially if this framework is being used for similar purposes without evidence to support such use.

Citation: Lash, A., Tran, L., & Huang, M. (2016). Examining the validity of ratings from a classroom observation instrument for use in a district’s teacher evaluation system (REL 2016–135). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory West. Retrieved from http://ies.ed.gov/ncee/edlabs/regions/west/pdf/REL_2016135.pdf

This blog post has been shared by permission from the author.

The views expressed by the blogger are not necessarily those of NEPC.