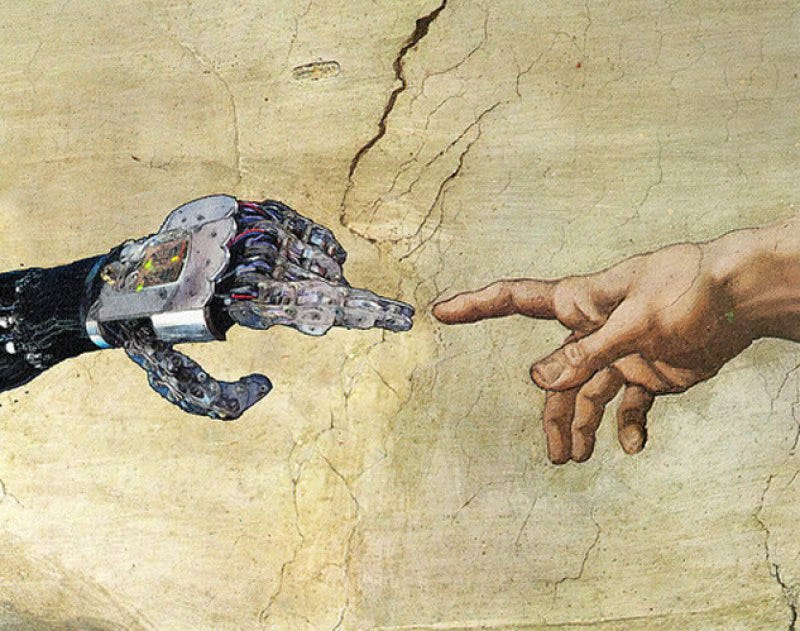

Letters from the Future (of Learning): The Future of A.I. is . . . I.A.?

Did you hear?

Starting this fall, Apple is upping its A.I. game -- with a twist.

In typical company fashion, A.I. will no longer stand for Artificial Intelligence, but Apple Intelligence, which the company will build into all your devices “to help you write, express yourself, and get things done effortlessly.”

Powered by a new partnership with OpenAI (and ChatGPT), Apple’s Siri will soon be able to draw on “all-new superpowers. Equipped with awareness of your personal context, Siri will be able to assist you like never before.”

I mean, what’s not to like?

And, to be clear, it may be great. But I can’t stop hearing the alarm Tyler Austin Harper just sounded in “The Big AI Risk Not Enough People Are Seeing.”

For him (and me), our growing infatuation with AI’s ability to do the hard stuff of being human -- from choosing the right song to the right phrase to, well, Mr. or Mrs. Right -- is, ominously, “a window into a future in which people require layer upon layer of algorithmic mediation between them in order to carry out the most basic of human interactions: those involving romance, sex, friendship, comfort, food.”

And that, Harper proclaims, is "a harbinger of a world of algorithms that leave people struggling to be people without assistance. We have entered the Age of Disabling Algorithms, as tech companies simultaneously sell us on our existing anxieties and help nurture new ones. Like an episode out of Black Mirror, the machines have arrived to teach us how to be human even as they strip us of our humanity."

Yikes.

And it’s not just Tyler Austin Harper sounding the alarm. “We’re seeing a general trend of selling AI as ‘empowering,’ a way to extend your ability to do something, whether that’s writing, making investments, or dating,” said Leif Weatherby, an expert on the history of AI development. “But what really happens is that we become so reliant on algorithmic decisions that we lose oversight over our own thought processes and even social relationships. The rhetoric of AI empowerment is sheep’s clothing for Silicon Valley wolves who are deliberately nurturing the public’s dependence on their platforms.”

In which case, what’s a sheep to do?

For Harper, the answer lies in adopting “a more sophisticated approach to artificial intelligence, one that allows us to distinguish between uses of AI that legitimately empower human beings and those—like hypothetical AI dating concierges—that wrest core human activities from human control. We need to be able to make granular, rational decisions about which uses of artificial intelligence expand our basic human capabilities, and which cultivate incompetence and incapacity under the guise of empowerment."

His point reminded me of a recent conversation with my friend Jennifer Stauffer, a veteran educator of the highest order who’s currently taking a grad course on A.I.

One of her professors, Bodong Chen, shared a valuable way of thinking about technology. It augments intelligence, he argues, creating complex interactive systems that are both beyond the human and beyond the technology.

Consequently, to effectively harness AI and mitigate the risks, we shouldn't focus on AI per se -- but on IA (intelligence augmentation).

This is not a new idea. Back in the 1960s, Douglas Engelbart of the Stanford Research Institute proposed a framework for augmenting human intellect built around the H-LAM/T system (Human using Language, Artifacts, and Methodology, in which he is Trained).

Tools change the human and vice versa. Whatever the tool (from fire to AI), we need to pay close attention to the system in which the human operates with it.

As Jennifer explained to me, “Dr. Chen mentioned the previous shift in education with smart boards and later with Wikipedia to emphasize that there are cycles of stories with new technologies in education that give insights about the systems in place and how IA was impacted. Ultimately, educators respond, interact, augment their intelligence, and provide a design space to create the next narrative, the next idea.”

Perhaps the way forward, then, is not to curl into a fetal position, but, as Jennifer suggested, to ask ourselves the following questions:

How does AI impact IA and vice versa? How should it impact IA? What new design space is available to us? And how do we effectively and ethically take advantage of that new design space?

Y/our move, homo sapiens . . .

This blog post has been shared by permission from the author.

Readers wishing to comment on the content are encouraged to do so via the link to the original post.

Find the original post here:

The views expressed by the blogger are not necessarily those of NEPC.